OpenTelemetry Collector Service

OTLP HTTP Exporter

In the Exporters section of the workshop, we configured the otlphttp exporter to send metrics to Splunk Observability Cloud. Defining an exporter does not activate it, so we now need to add it to the metrics pipeline.

Update the exporters list of the metrics pipeline to include otlphttp/splunk:

service:

pipelines:

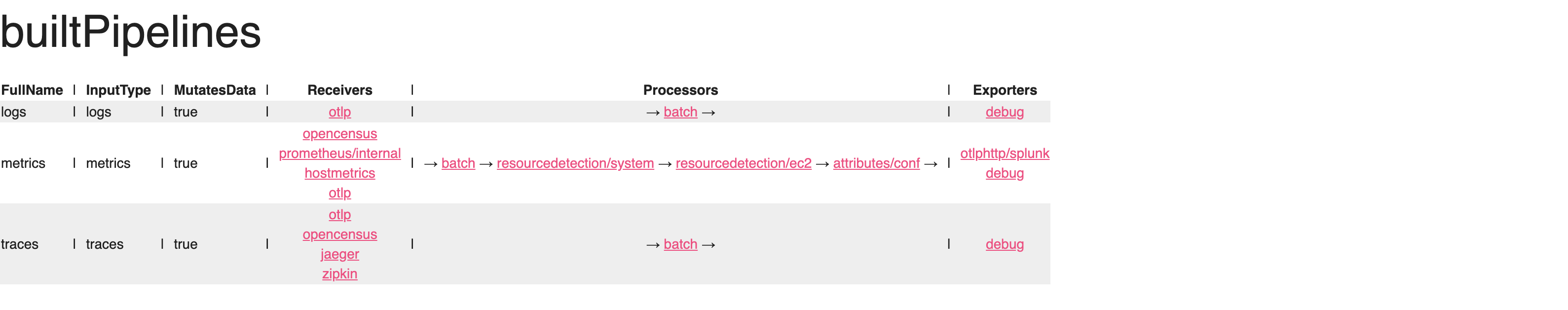

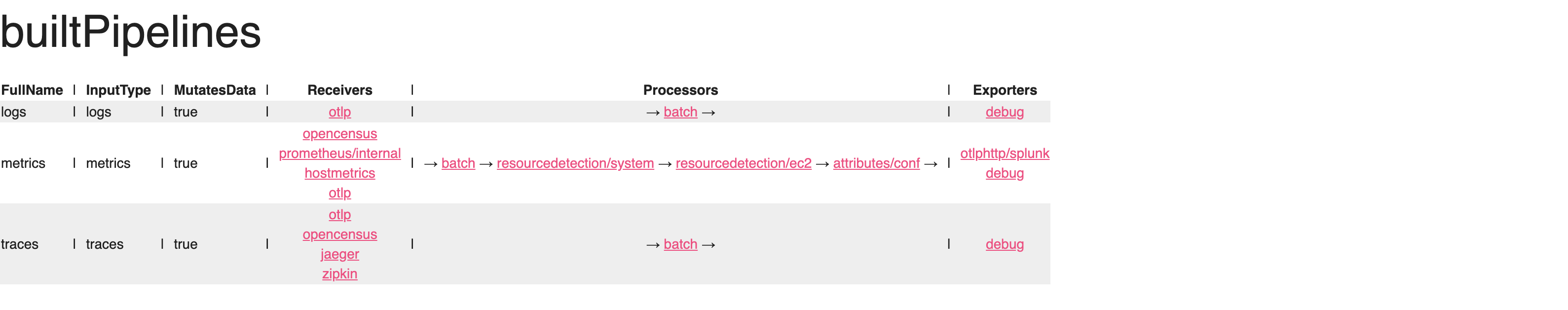

traces:

receivers: [otlp, opencensus, jaeger, zipkin]

processors: [batch]

exporters: [debug]

metrics:

receivers: [hostmetrics, otlp, opencensus, prometheus/internal]

processors: [batch, resourcedetection/system, resourcedetection/ec2, attributes/conf]

exporters: [debug, otlphttp/splunk]Final configuration
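The otlphttp/splunk entry in the pipeline must match an exporter defined in the top-level exporters section. For reference, a minimal sketch of such a definition is shown below. It assumes the realm and access token are supplied through environment variables named REALM and ACCESS_TOKEN, and the ingest URL is likewise an assumption; use the values from your own Exporters section.

```yaml
exporters:
  otlphttp/splunk:
    # Assumption: Splunk Observability Cloud OTLP metrics ingest URL for your realm,
    # with the realm read from an environment variable named REALM.
    metrics_endpoint: https://ingest.${env:REALM}.signalfx.com/v2/datapoint/otlp
    headers:
      # Assumption: the access token is read from an environment variable named ACCESS_TOKEN.
      X-SF-Token: ${env:ACCESS_TOKEN}
```

The part after the slash (splunk) is only an instance name. It lets the pipeline refer to this particular otlphttp exporter while the debug exporter stays in the same list.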

Tip

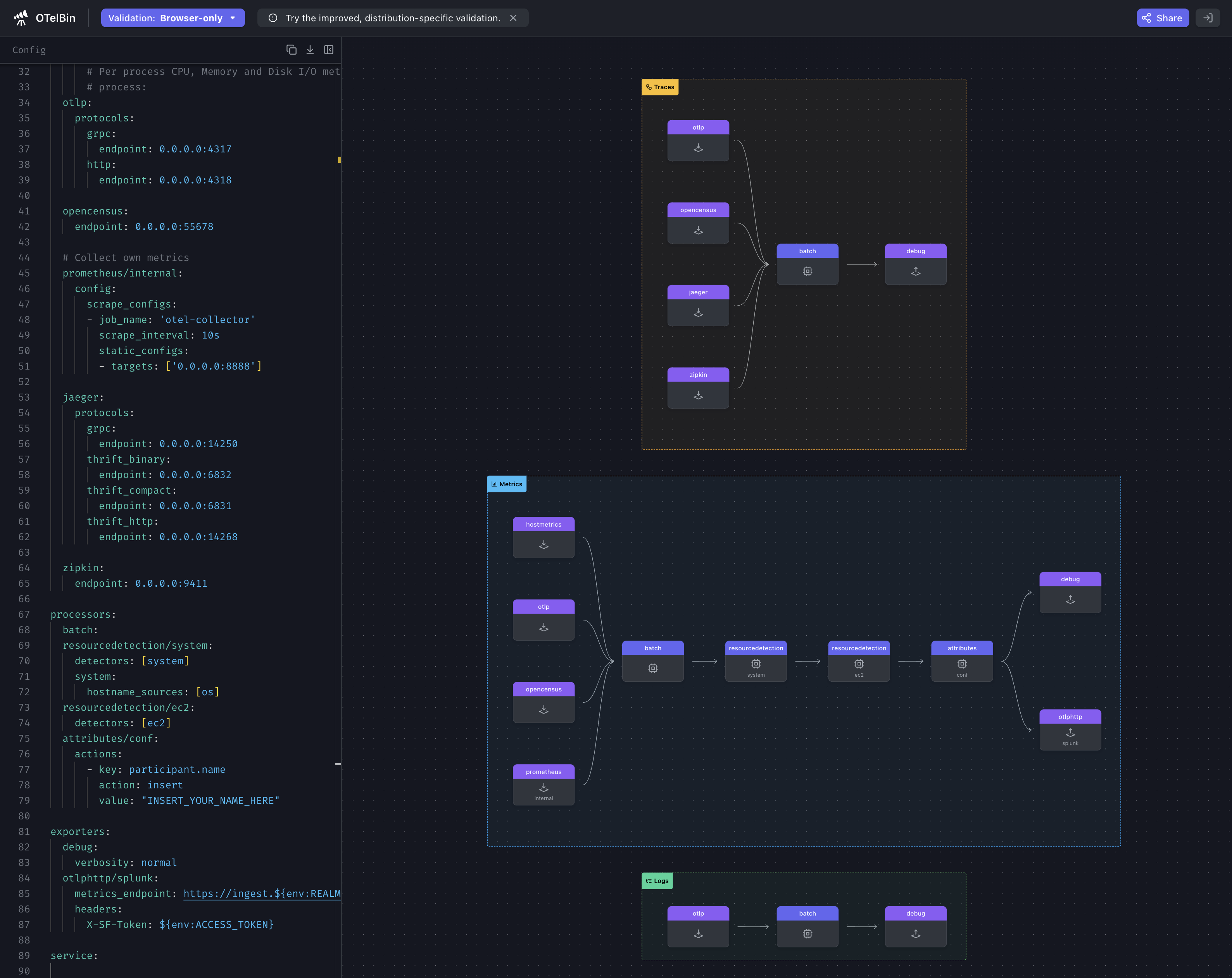

It is recommended that you validate your configuration file before restarting the collector. You can do this by pasting the contents of your config.yaml file into the OTelBin Configuration Validator tool.

Now that we have a working configuration, let’s start the collector and then check to see what zPages is reporting.

```bash
otelcol-contrib --config=file:/etc/otelcol-contrib/config.yaml
```

Open zPages in your browser: http://localhost:55679/debug/pipelinez (replace localhost with the hostname or IP address of your own environment).
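The pipelinez page is served by the zPages extension, so it only responds if that extension is both configured and listed under service. If your configuration does not already include it, a minimal sketch of the relevant fragment is below. It assumes the extension should listen on all interfaces (0.0.0.0) so the page can be reached from outside the host.

```yaml
extensions:
  zpages:
    # Assumption: listen on all interfaces so the page is reachable remotely.
    # This exposes zPages to the network, so restrict access where appropriate.
    endpoint: 0.0.0.0:55679

service:
  # Add zpages to the extensions list of your existing service section
  # (next to pipelines), rather than creating a second service key.
  extensions: [zpages]
```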