Deduplicate Notable Events

Throttle Alerts Which Have Already Been Reviewed or Fired

Because Risk Notables look at a rolling period of time, it is common for a risk_object to keep creating notables as additional (and even duplicate) events roll in, and again when older events fall off as the time period moves forward. Additionally, different Risk Incident Rules can fire on the same risk_object with the same events and each create a new Risk Notable. This is difficult to work around with throttling alone, so here are some methods to deduplicate notables.

Navigation

Here are two methods for Deduplicating Notable Events:

| Method | Skill Level | Pros | Cons |
|---|---|---|---|
| Method I | Intermediate | Deduplicates on front and back end | More setup time |
| Method II | Beginner | Easy to get started with | Only deduplicates on back end |

Method I

We'll use a Saved Search to store each Risk Notable's risk events and our analyst's status decision as a cross-reference for new notables. Altogether new events will still fire, but repeated events from the same source will not. This also takes care of duplicate notables on the back end as events roll off of our search window.

KEEP IN MIND

Edits to the Incident Review - Main search may be replaced on updates to Enterprise Security, requiring you to make this minor edit again to regain this functionality. Ensure you have a step in your relevant process to check this search after an update.

1. Create a Truth Table

This method is described in Stuart McIntosh's 2019 .conf Talk (about 9m10s in), and we're going to create a similar lookup table. You can either download and import that file yourself, or create something like this in the Lookup Editor app:

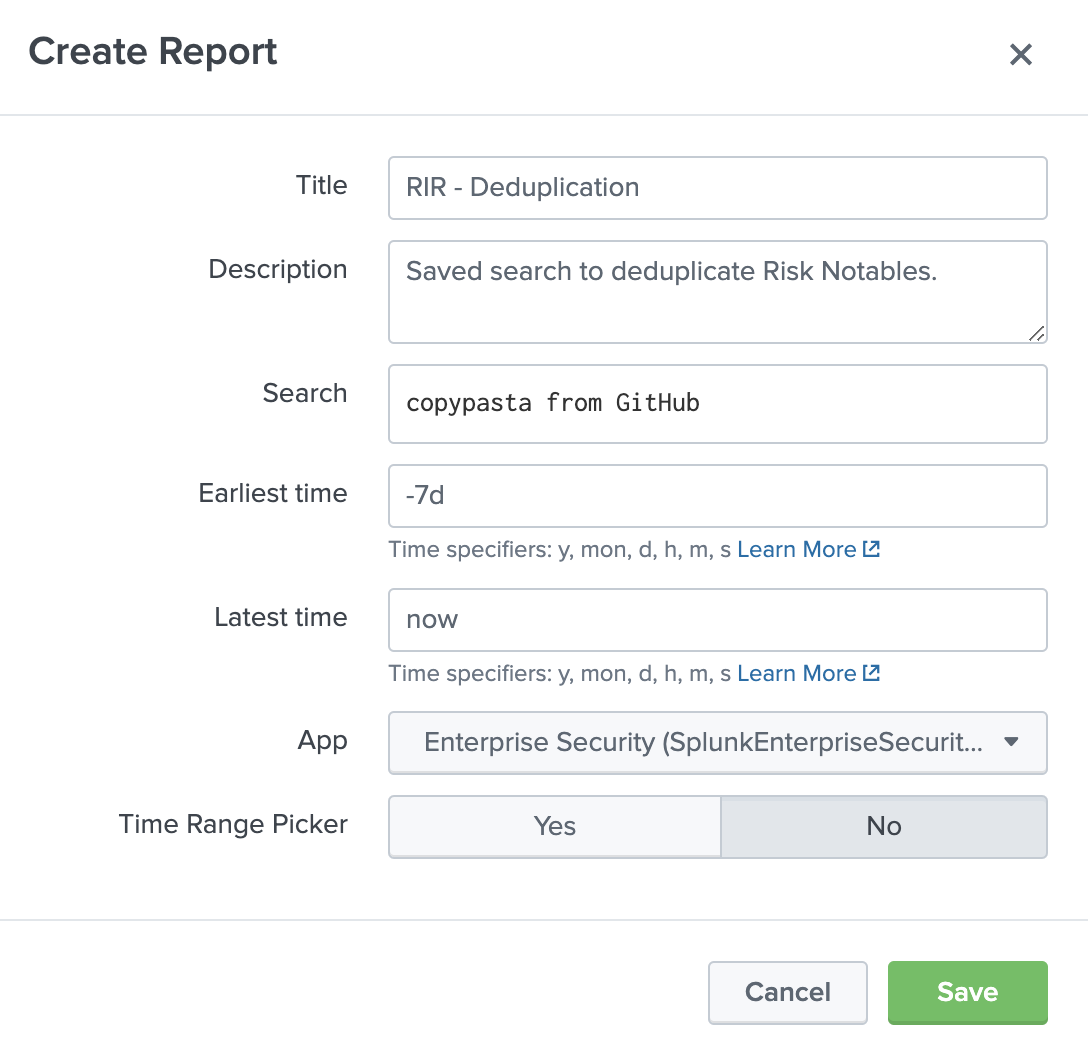

2. Create a Saved Search

Then we'll create a Saved Search which runs relatively frequently to store notable data and statuses.

- Navigate to Settings -> Searches, reports, and alerts.

- Select "New Report" in the top right.

Here is a sample to replicate

previousStatus uses the default ES status label "Closed".

In the SPL for previousStatus above, I used the default ES status label "Closed" as our only nonmalicious status. You'll have to make sure to use status labels which are relevant for your Incident Review settings. "Malicious" is used as the fallback status just in case, but you may want to differentiate "New" or unmatched statuses as something else for audit purposes; just make sure to create relevant matches in your truth table.

I recommend copying the alert column from malicious events

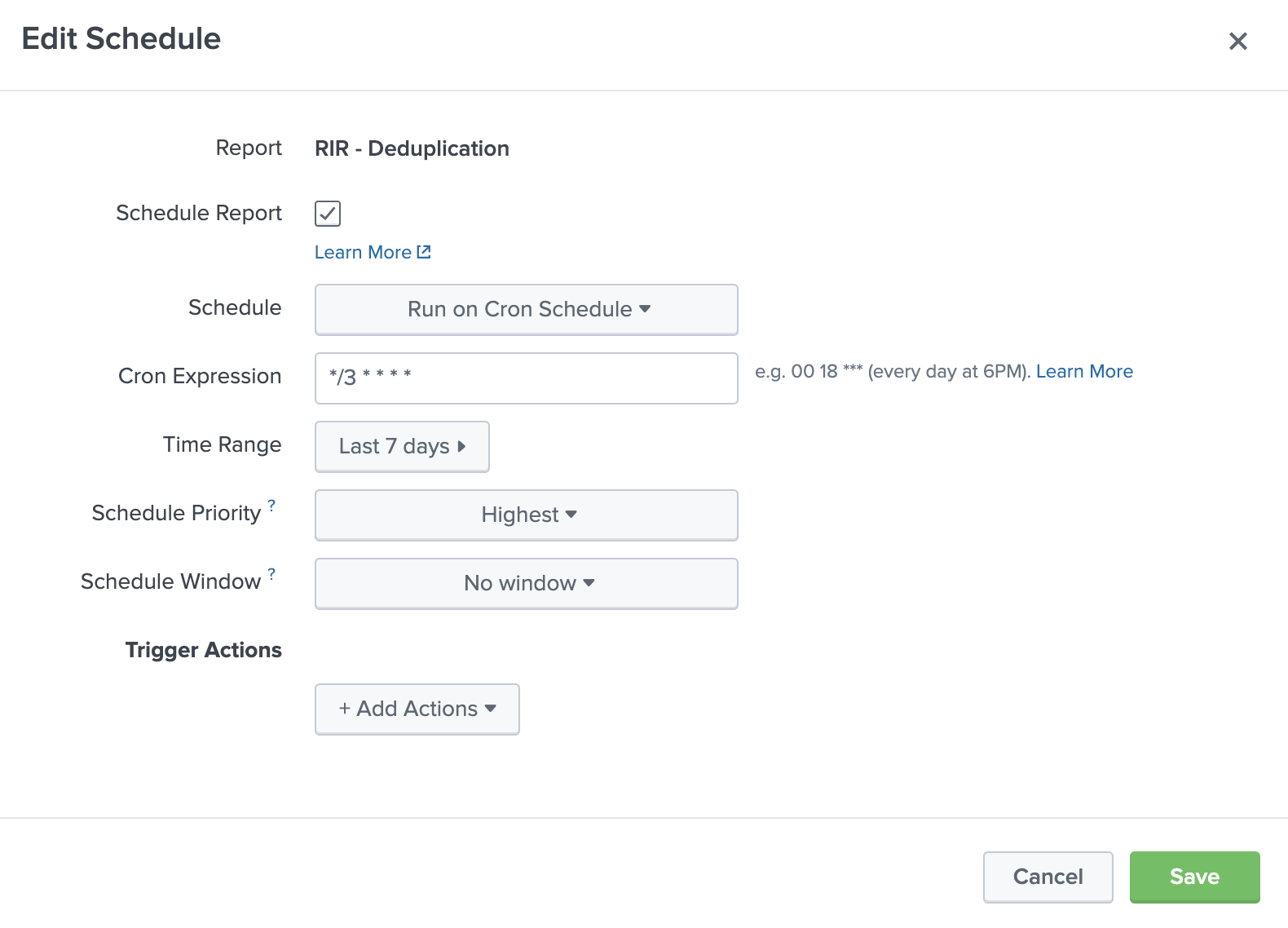
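
As a rough illustration of what the truth table decides, here is a minimal Python sketch. It is not the Saved Search itself: the `Notable` class, the `TRUTH_TABLE` rows, and the `should_alert` helper are all hypothetical, and the alert values are placeholders for whatever your own risk appetite dictates. The only details taken from the text above are that "Closed" is treated as the sole non-malicious status and that anything else falls back to malicious.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Notable:
    """Hypothetical stand-in for one stored Risk Notable."""
    risk_object: str
    sources: frozenset      # names of the risk rules that contributed
    risk_score: int
    status: str = "New"     # analyst status label from Incident Review


def previous_status(label: str) -> str:
    # "Closed" is the only non-malicious label; everything else falls back to malicious.
    return "nonmalicious" if label == "Closed" else "malicious"


# Illustrative truth table (an assumption, not the author's):
# (same rules as before?, same score as before?, previous status) -> alert?
TRUTH_TABLE = {
    (True, True, "nonmalicious"): False,
    (True, False, "nonmalicious"): False,
    (False, True, "nonmalicious"): True,
    (False, False, "nonmalicious"): True,
    (True, True, "malicious"): False,
    (True, False, "malicious"): True,
    (False, True, "malicious"): True,
    (False, False, "malicious"): True,
}


def should_alert(new: Notable, prev: Optional[Notable]) -> bool:
    """Look up the alert decision for a new notable given the previous one."""
    if prev is None:
        return True  # nothing to compare against, so the notable is new
    key = (
        new.sources == prev.sources,
        new.risk_score == prev.risk_score,
        previous_status(prev.status),
    )
    return TRUTH_TABLE[key]
```

With these placeholder rows, a notable that repeats the same rules and score as one an analyst already closed is suppressed, while a notable involving a rule not seen before still alerts.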

Schedule the Saved Search

Now find the search in this menu, click *Edit -> Edit Schedule* and try these settings:

- Schedule: Run on Cron Schedule

- Cron Expression: `*/3 * * * *`

- Time Range: Last 7 days

- Schedule Priority: Highest

- Schedule Window: No window

I made this search pretty lean, so running it every three minutes should work pretty well; I also decided to only look back seven days as this lookup could balloon in size and cause bundle replication issues. You probably want to stagger your Risk Incident Rule cron schedules by one minute more than this one so they don't fire on the same risk_object with the same risk events.

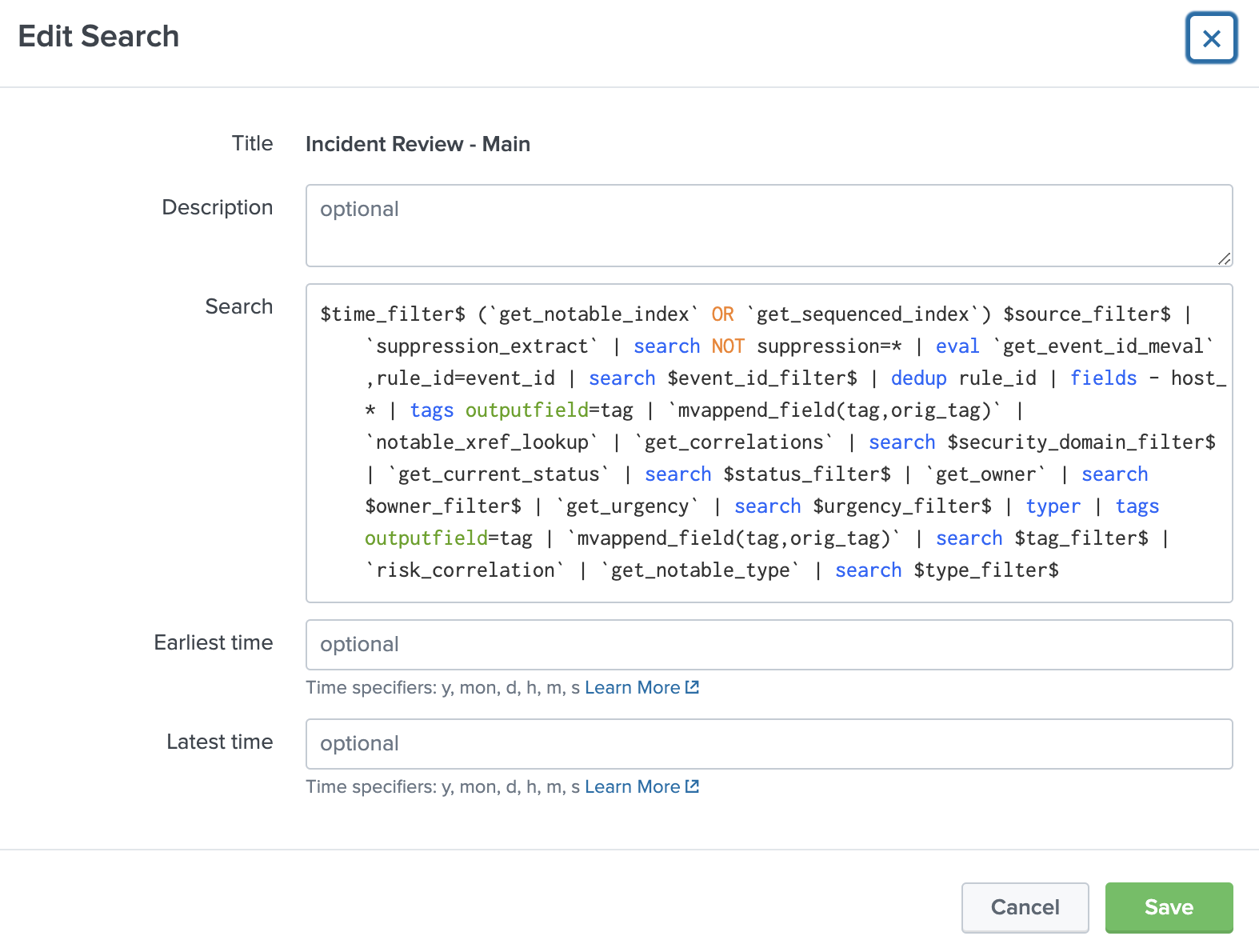

3. Deduplicate notables

Our last step is to ensure that the Incident Review panel doesn't show us notables that match a row in our truth table which doesn't make sense to alert on. On the Searches, reports, and alerts page, find the search Incident Review - Main and click Edit -> Edit Search.

By default it looks like this:

And we're just inserting this line after the base search

Congratulations!

You should now have a significant reduction in duplicate notables.

If something isn't working, make sure that the Saved Search is correctly outputting a lookup (which should have Global permissions), and that running | inputlookup RIR-Deduplicate.csv returns all of the fields you expect. If Incident Review is not working, something is wrong with the lookup or your edit to that search.

Extra Credit

If you utilize the Risk info field so you have a short and sweet risk_message, you can add another level of granularity to your truth table.

If you utilize risk_message for ALL of the event detail, it may be too granular to be helpful for throttling.

This is especially useful if you are creating risk events from a data source with its own signatures like EDR, IDS, or DLP. Because the initial truth table only looks at score and correlation rule, if you have one correlation rule importing numerous signatures, you may want to alert when a new signature within that source fires.

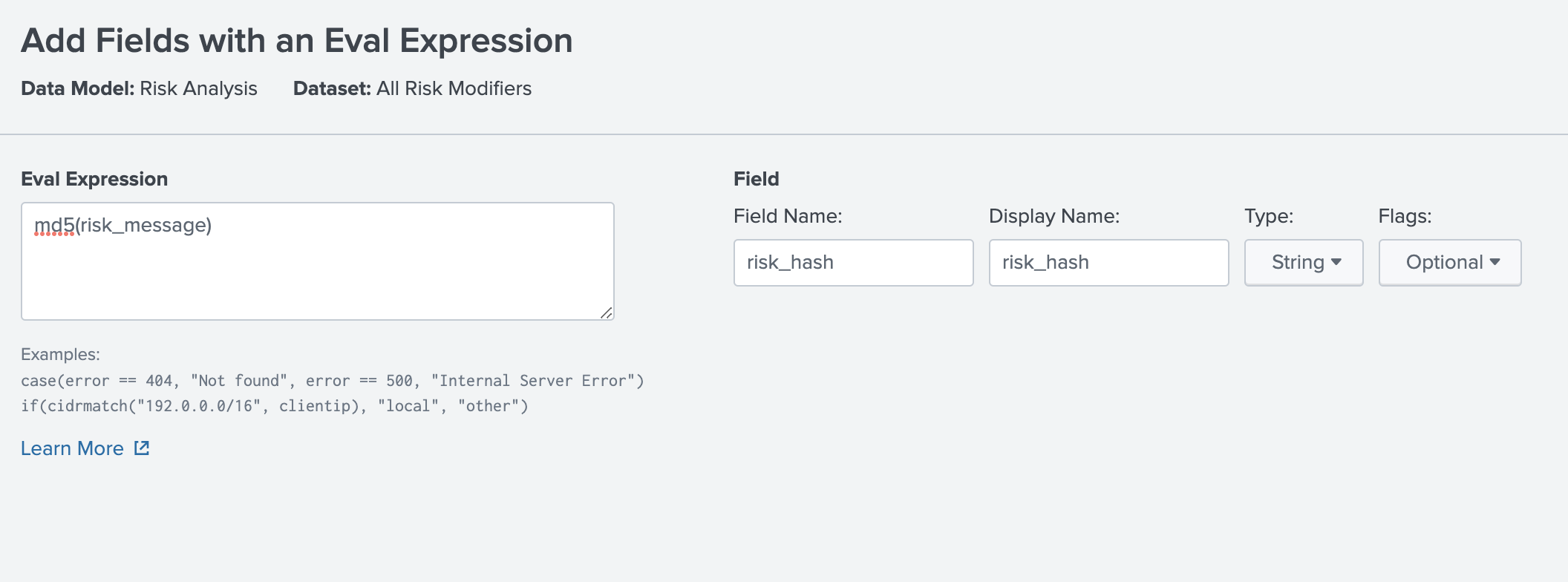

Create a calculated field

First, we'll create a new Calculated Field from risk_message in our Risk Datamodel called risk_hash with eval's md5() function, which bypasses the need to deal with special characters or other strangeness that might be in that field. If you haven't done this before - no worries - you just have to go to Settings -> Data Models -> Risk Data Model -> Edit -> Edit Acceleration and turn this off. Afterwards, you can Create New -> Eval Expression like this:

Don't forget to re-enable the acceleration

You may have to rebuild the data model from the Settings -> Data Model menu for this field to appear in your events.
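
To see why hashing sidesteps special characters, here is a small Python sketch of the same idea. The `risk_hash` function below is a stand-in written for illustration, not Splunk's implementation, and it assumes the message is UTF-8 encoded before hashing. Whatever the input contains, the result is a fixed-length string of 32 hexadecimal characters that is safe to store and compare in a lookup.

```python
import hashlib


def risk_hash(risk_message: str) -> str:
    """Return the MD5 hex digest of a risk message (illustrative helper)."""
    return hashlib.md5(risk_message.encode("utf-8")).hexdigest()


# Made-up message with quotes, pipes, and backslashes, all of which
# disappear once the message is reduced to a hex digest.
messy = 'IDS sig "ET TROJAN" | cmd=C:\\temp\\a.exe; user=O\'Neil'
digest = risk_hash(messy)
```

The same message always produces the same digest, so two risk events with identical messages can be matched by hash alone.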

Update SPL

Then we have to add this field into our Risk Incident Rules by adding this line to their initial SPL and ensuring the field is retained downstream:

Now our Risk Notables will have a multi-value list of risk_message hashes. We must update our truth table to include a field called "matchHashes" - I've created a sample truth table here, but you must decide what is the proper risk appetite for your organization.
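
One way to think about a "matchHashes" comparison is as a set check between the hashes on the new notable and those on the previous one. The Python sketch below is only an assumption about how such a comparison could work: `hashes_match` and the sample messages are hypothetical, and treating "match" as "no unseen hashes" (a subset test) is one reasonable choice rather than the required one.

```python
import hashlib


def risk_hash(risk_message: str) -> str:
    """MD5 hex digest of a risk message (illustrative helper)."""
    return hashlib.md5(risk_message.encode("utf-8")).hexdigest()


def hashes_match(new_hashes, previous_hashes) -> bool:
    """True when the new notable carries no hash absent from the previous one."""
    return set(new_hashes) <= set(previous_hashes)


# Made-up signature messages from a single EDR-style correlation rule.
previous = [
    risk_hash("EDR: Suspicious PowerShell"),
    risk_hash("EDR: Credential dumping"),
]
repeat = [risk_hash("EDR: Suspicious PowerShell")]
novel = repeat + [risk_hash("EDR: Ransomware behavior")]
```

Here `hashes_match(repeat, previous)` is true because nothing new appeared, while `hashes_match(novel, previous)` is false because a signature not seen before fired, which is the case you may want your truth table to alert on.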

Next we'll edit the Saved Search we created above to include the new fields and logic:

Voila! We now ensure that our signature-based risk rule data sources will properly alert if there are interesting new events for that risk object.

Method II

This method is an elegantly simple way to ensure notables don't re-fire as earlier events drop off the rolling search window of your Risk Incident Rules. It does this by firing only if the latest risk event was indexed within the past 70 minutes.

    ...
    | stats latest(_indextime) AS latest_risk
    | where latest_risk >= relative_time(now(),"-70m@m")
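
For readers less familiar with time modifiers, the check above can be sketched in Python. This is an illustration of the logic only: `window_start` and `should_fire` are hypothetical names, and the sketch assumes that "-70m@m" means "subtract 70 minutes, then snap down to the start of that minute".

```python
from datetime import datetime, timedelta, timezone


def window_start(now: datetime, minutes: int = 70) -> datetime:
    """Go back `minutes` from `now`, then snap down to the start of the minute."""
    return (now - timedelta(minutes=minutes)).replace(second=0, microsecond=0)


def should_fire(latest_risk: datetime, now: datetime) -> bool:
    """Fire only if the most recent risk event was indexed inside the window."""
    return latest_risk >= window_start(now)


now = datetime(2024, 1, 1, 12, 0, 30, tzinfo=timezone.utc)
fresh = datetime(2024, 1, 1, 11, 45, 0, tzinfo=timezone.utc)
stale = datetime(2024, 1, 1, 10, 49, 59, tzinfo=timezone.utc)
```

With these sample values the window starts at 10:50:00, so `should_fire(fresh, now)` is true and `should_fire(stale, now)` is false: a notable whose newest risk event is older than the window is only reappearing because earlier events rolled off, and it stays quiet.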

Credit to Josh Hrabar and James Campbell; this is brilliant. Thanks y'all!
