
Release notes for the Splunk Add-on for AWS

The release notes cover compatibility for software, Common Information Model (CIM) versions, and platforms.

Note

The field alias functionality is compatible with version 5.0.3 and later of this add-on. Versions 5.0.3 and later do not support older field alias configurations. For more information about the field alias configuration change, see the Splunk Enterprise Release Notes.

Version 8.1.0 (latest)

Version 8.1.0 of the Splunk Add-on for Amazon Web Services was released on

Upgrade guide for v8.1.0

If you are upgrading from a version of the Splunk Add-on for AWS earlier than 7.1.0, first upgrade to 7.11.0, and then upgrade to v8.1.0.
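The upgrade rule above is a small decision procedure. The following Python sketch is offered only as an illustration and encodes the rule as the guide states it: installations older than 7.1.0 pass through 7.11.0 before reaching the target release. The names `upgrade_path`, `parse_version`, `THRESHOLD`, and `INTERMEDIATE` are hypothetical and are not part of the add-on or any Splunk tool. Versions are compared as integer tuples because a plain string comparison would wrongly rank "7.9.1" above "7.11.0".

```python
# Hypothetical helper that encodes the upgrade rule in the v8.x upgrade guide as written.
# Assumption: versions are plain "major.minor.patch" strings.

THRESHOLD = (7, 1, 0)      # per the guide text: older installs need an intermediate hop
INTERMEDIATE = (7, 11, 0)  # release to install first


def parse_version(text: str) -> tuple:
    """Turn '7.10.0' into (7, 10, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def upgrade_path(current: str, target: str) -> list:
    """Return the versions to install, in order, to go from current to target."""
    cur, tgt = parse_version(current), parse_version(target)
    if cur >= tgt:
        return []  # already at or beyond the target release
    steps = []
    if cur < THRESHOLD and tgt > INTERMEDIATE:
        # Install the intermediate release before jumping to the target.
        steps.append(".".join(str(n) for n in INTERMEDIATE))
    steps.append(target)
    return steps


print(upgrade_path("6.4.0", "8.1.0"))  # ['7.11.0', '8.1.0']
```

An installation at 7.10.0 gets `['8.1.0']` from this sketch because it is not older than the 7.1.0 threshold.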

Compatibility

Version 8.1.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.3.x, 9.4.x, 10.0.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |
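To check a deployment against this table, compare only the major and minor parts of the Splunk platform version, because each `x` entry covers every maintenance release in that line. The Python sketch below assumes that reading of `x`. The `SUPPORTED_8_1_0` set is copied from the table, and `is_supported` is a hypothetical helper.

```python
# Sketch: compare a Splunk platform version with the 8.1.0 compatibility table.
# Assumption: an "N.N.x" entry covers every maintenance release of that major.minor line.

SUPPORTED_8_1_0 = frozenset({"9.3", "9.4", "10.0"})  # copied from the table above


def is_supported(splunk_version: str, supported=SUPPORTED_8_1_0) -> bool:
    """Return True if the major.minor part of splunk_version is listed as supported."""
    parts = splunk_version.strip().split(".")
    if len(parts) < 2:
        return False  # cannot match an "N.N.x" entry without a minor version
    return f"{parts[0]}.{parts[1]}" in supported


for version in ("9.4.2", "9.2.1", "10.0.0"):
    print(version, is_supported(version))
```

With this data, 9.2.1 is reported as unsupported. The 9.2.x line appears in the 7.11.0 compatibility table but not in the 8.1.0 table.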

New features

Version 8.1.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added support for the new `ocsf:aws:securityhub:finding` source type for the Security Hub OCSF schema.

Upgrade

- Before upgrading to v8.1.0, follow the upgrade guide.

Fixed issues

Version 8.1.0 of the Splunk Add-on for Amazon Web Services fixes the following issues, if any:

Known issues

Version 8.1.0 of the Splunk Add-on for Amazon Web Services has the following known issues, if any:

Third-party software attributions

Version 8.1.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 8.0.0

Version 8.0.0 of the Splunk Add-on for Amazon Web Services was released on

Upgrade guide for v8.0.0

If you are upgrading from a version of the Splunk Add-on for AWS earlier than 7.1.0, first upgrade to 7.11.0, and then upgrade to v8.0.0.

Compatibility

Version 8.0.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.3.x, 9.4.x, 10.0.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 8.0.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Deprecated support for Python 3.7.

- Security fixes.

Upgrade

- Before upgrading to v8.0.0, follow the upgrade guide.

Fixed issues

Version 8.0.0 of the Splunk Add-on for Amazon Web Services fixes the following issues, if any:

Known issues

Version 8.0.0 of the Splunk Add-on for Amazon Web Services has the following known issues, if any:

Third-party software attributions

Version 8.0.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.11.0

Version 7.11.0 of the Splunk Add-on for Amazon Web Services was released on

Compatibility

Version 7.11.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.2.x, 9.3.x, 9.4.x, 10.0.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.11.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Enhanced the execution of the SQS input to improve performance. Added the SQS Max Threads parameter on the Configuration > Add-on Global Settings page. For more information, see Add-on Global Settings.

- Improved the `src_type` and `dest_type` CIM field extractions for the `aws:securityhub:finding` source type.

Fixed issues

Version 7.11.0 of the Splunk Add-on for Amazon Web Services fixes the following issues, if any:

Known issues

Version 7.11.0 of the Splunk Add-on for Amazon Web Services has the following known issues, if any:

Third-party software attributions

Version 7.11.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.10.0

Version 7.10.0 of the Splunk Add-on for Amazon Web Services was released on

Compatibility

Version 7.10.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.1.x, 9.2.x, 9.3.x, 9.4.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.10.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Enhanced CIM support for the `aws:cloudtrail` source type.

- Fixed a security vulnerability in `babel/runtime`.

CIM model changes

See the following CIM model changes between 7.9.1 and 7.10.0:

| Sourcetype | eventName | Previous CIM model | New CIM model |
|---|---|---|---|
| aws:cloudtrail | DetachInternetGateway | Change.Network_Changes | Change.All_Changes |
| aws:cloudtrail | GenerateCredentialReport | Change.Account_Management | Change.All_Changes |
| aws:cloudtrail | DescribeLogStreams, DescribeLoggingConfiguration, DescribeNatGateways, DescribeNetworkAcls, DescribeNetworkInterfaces, DescribeNotebookInstance, DescribeOrganizationConfiguration, DescribeRepositories, DescribeReservedDBInstances, DescribeReservedInstances, DescribeReservedInstancesListings, DescribeRouteTables, DescribeRules, DescribeSecurityGroupRules, DescribeSecurityGroups, DescribeSnapshots, DescribeStacks, DescribeStream, DescribeSubnets, DescribeTable, DescribeTargetGroups, DescribeUserProfiles, DescribeVolumes, DescribeVpcEndpointServicePermissions, DescribeVpcEndpoints, DescribeVpcs, DescribeVpnConnections, DescribeWorkspaceDirectories, DescribeWorkspaces, DriverExecute, EnableControl, Error_GET, GetAccessPointPolicy, GetAccountPasswordPolicy, GetAccountPublicAccessBlock, GetAdminScope, GetAlternateContact, GetContactInformation | - | Change.All_Changes |
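In the CIM Change data model, Change.Network_Changes and Change.Account_Management are child datasets of Change.All_Changes. As a result, searches that filter on one of those child datasets may stop matching the events remapped in this table. The following Python sketch encodes the first two rows of the table and a few entries from the third row. It is a subset, not the full list. The sketch reports which events leave a given dataset and which events are newly mapped. `CIM_CHANGES_7_10_0`, `events_leaving`, and `newly_mapped` are hypothetical names.

```python
# Sketch: a subset of the 7.10.0 CIM model changes table, keyed by CloudTrail eventName.
# Values are (previous CIM model, new CIM model); None stands for "-" (no previous model).
# The dictionary and helper names are hypothetical; extend the dict with the full event list.

CIM_CHANGES_7_10_0 = {
    "DetachInternetGateway": ("Change.Network_Changes", "Change.All_Changes"),
    "GenerateCredentialReport": ("Change.Account_Management", "Change.All_Changes"),
    # A few entries from the third row; the table lists many more.
    "DescribeLogStreams": (None, "Change.All_Changes"),
    "DescribeVpcs": (None, "Change.All_Changes"),
    "GetContactInformation": (None, "Change.All_Changes"),
}


def events_leaving(dataset: str, changes=CIM_CHANGES_7_10_0) -> list:
    """Events that mapped to `dataset` before the upgrade but map elsewhere afterwards."""
    return sorted(
        name for name, (prev, new) in changes.items() if prev == dataset and new != dataset
    )


def newly_mapped(changes=CIM_CHANGES_7_10_0) -> list:
    """Events that had no CIM model before the upgrade."""
    return sorted(name for name, (prev, _new) in changes.items() if prev is None)


print(events_leaving("Change.Network_Changes"))  # ['DetachInternetGateway']
```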

Field changes

| Sourcetype | eventName | Added fields | Removed fields |
|---|---|---|---|
| aws:cloudtrail | DescribeLogStreams, DescribeRules, DescribeStacks | object_id, object, action, object_path, user, status, object_attrs | |
| aws:cloudtrail | DescribeLoggingConfiguration, DescribeTable | object_id, object, change_type, action, object_path, user, status, object_attrs | |
| aws:cloudtrail | DescribeNatGateways, DescribeNetworkAcls, DescribeNetworkInterfaces, DescribeReservedInstancesListings, DescribeRouteTables, DescribeSecurityGroupRules, DescribeSecurityGroups, DescribeSnapshots, DescribeSubnets, DescribeVolumes, DescribeVpcEndpointServicePermissions, DescribeVpcEndpoints, DescribeVpcs, DescribeVpnConnections, DetachInternetGateway, GenerateCredentialReport, GetAccessPointPolicy, GetAccountPublicAccessBlock | object_id, object, action, user, status, object_attrs | |
| aws:cloudtrail | DescribeNotebookInstance, DescribeTargetGroups | object_id, object, action, status, object_attrs | |
| aws:cloudtrail | DescribeOrganizationConfiguration, DescribeStream, DescribeUserProfiles, DescribeWorkspaceDirectories, DescribeWorkspaces, GetAdminScope, GetAlternateContact | object_id, object, change_type, action, user, status, object_attrs | |
| aws:cloudtrail | DescribeRepositories | object_id, object, action, object_path, status, object_attrs | |
| aws:cloudtrail | DescribeReservedDBInstances, DescribeReservedInstances | action, status, object_attrs | |
| aws:cloudtrail | DriverExecute | object_id, object, action, object_path, result, user, status, object_attrs | |
| aws:cloudtrail | EnableControl | object_id, object, change_type, action, object_path, result, user, status, object_attrs | |
| aws:cloudtrail | Error_GET | object_id, object, action, result, status, object_attrs | |
| aws:cloudtrail | GetAccountPasswordPolicy | object_id, object, user, object_attrs | |
| aws:cloudtrail | GetContactInformation | change_type, action, status, object_attrs | |

Fixed issues

Version 7.10.0 of the Splunk Add-on for Amazon Web Services fixes the following issues, if any:

Known issues

Version 7.10.0 of the Splunk Add-on for Amazon Web Services has the following known issues, if any:

Third-party software attributions

Version 7.10.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.9.1

Version 7.9.1 of the Splunk Add-on for Amazon Web Services was released on

Compatibility

Version 7.9.1 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.1.x, 9.2.x, 9.3.x, 9.4.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.9.1 of the Splunk Add-on for AWS contains the following new and changed features:

- Addressed a potential issue with conf files being reloaded.

- Security fixes.

Fixed issues

Version 7.9.1 of the Splunk Add-on for Amazon Web Services fixes the following issues, if any:

Known issues

Version 7.9.1 of the Splunk Add-on for Amazon Web Services has the following known issues, if any:

Third-party software attributions

Version 7.9.1 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.9.0

Compatibility

Version 7.9.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.1.x, 9.2.x, 9.3.x, 9.4.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.9.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Upgraded the Splunk SDK to the latest version, ensuring compatibility with future cloud-based deployments.

- Enhanced CIM support for the `aws:cloudtrail` source type.

CIM model changes

See the following CIM model changes between 7.8.0 and 7.9.0:

| Sourcetype | eventName | Previous CIM model | New CIM model |
|---|---|---|---|
| aws:cloudtrail | AttachRolePolicy, CreatePolicy, CreateServiceLinkedRole | Change.Account_Management | Change.All_Changes |
| aws:cloudtrail | CreateVpc, DeleteDBSubnetGroup, DeleteVpcEndpoints | Change.Network_Changes | Change.All_Changes |
| aws:cloudtrail | AddMemberToGroup, AdminCreateUser, AdminGetUser, AdminResetUserPassword | | Change.Account_Management |
| aws:cloudtrail | BatchGetImage, Decrypt, DescribeAccessPoints, DescribeAccountSubscription, DescribeAddresses, DescribeBackupPolicy, DescribeCluster, DescribeContinuousBackups, DescribeCustomerGateways, DescribeDBClusterSnapshotAttributes, DescribeDBClusterSnapshots, DescribeDBClusters, DescribeDBEngineVersions, DescribeDBInstances, DescribeDBSecurityGroups, DescribeDBSnapshotAttributes, DescribeDBSnapshots, DescribeDBSubnetGroups, DescribeDRTAccess, DescribeDeliveryStream, DescribeDirectories, DescribeEndpoint, DescribeFileSystemPolicy, DescribeFileSystems, DescribeFleets, DescribeHosts, DescribeHub, DescribeImages, DescribeInstances, DescribeInternetGateways, DescribeJobs, DescribeKeyPairs, DescribeListeners, DescribeLoadBalancers, DescribeSecret, GetDomainPermissionsPolicy, GetSecretValue, GetSecurityConfigurations, ListOrganizationAdminAccounts | | Change.All_Changes |
| aws:cloudtrail | DescribeAddresses, DescribeCustomerGateways, DescribeDBSecurityGroups, DescribeDBSubnetGroups, DescribeInternetGateways | | Change.All_Changes |

Field changes

| Sourcetype | eventName | Added fields | Modified fields | Removed fields | Previous values (v1) | New values (v2) |
|---|---|---|---|---|---|---|
| aws:cloudtrail | AddMemberToGroup | user, change_type, object_id, status, action, src_user_type, src_user, object, object_attrs, src_user_name | object_category, tag, eventtype, tag::eventtype | user_name | unknown, , , | user, account,management,change, aws_cloudtrail_iam_change_acctmgmt, account,management,change |
| aws:cloudtrail | AdminCreateUser | change_type, object_id, status, action, object, object_attrs, src_user_name | object_category, tag, eventtype, user, tag::eventtype, user_name | | unknown, , , digital_nomad,dev-swb-svc, , digital_nomad,dev-swb-svc | user, account,management,change, aws_cloudtrail_iam_change_acctmgmt, HIDDEN_DUE_TO_SECURITY_REASONS, account,management,change, HIDDEN_DUE_TO_SECURITY_REASONS |
| aws:cloudtrail | AdminGetUser | change_type, object_id, status, action, object, object_attrs, src_user_name | object_category, tag, eventtype, user, tag::eventtype, user_name | | unknown, , , digital_nomad,dev-swb-svc, , digital_nomad,dev-swb-svc | user, account,management,change, aws_cloudtrail_iam_change_acctmgmt, HIDDEN_DUE_TO_SECURITY_REASONS, account,management,change, HIDDEN_DUE_TO_SECURITY_REASONS |
| aws:cloudtrail | AdminResetUserPassword | change_type, user, object_id, status, action, src_user_type, src_user, object, object_attrs, src_user_name | object_category, tag, eventtype, tag::eventtype, user_name | | unknown, , , , ACOE-AWS-Developer | user, account,management,change, aws_cloudtrail_iam_change_acctmgmt, account,management,change, HIDDEN_DUE_TO_SECURITY_REASONS |
| aws:cloudtrail | AssumeRole | object, src_user_name | object_category, change_type, user_type, user | | unknown, EC2,STS, , | user, AAA,virtual computing, AssumedRole, AWSAccountAuditFunction-role-85lvmt4g |
| aws:cloudtrail | AssumeRoleWithSAML | | change_type | | STS | AAA |
| aws:cloudtrail | AssumeRoleWithWebIdentity | | change_type, src_user_id | | STS, | AAA, accounts.google.com: |
| aws:cloudtrail | AttachRolePolicy | object_id, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user, action, user_name | | unknown, account,management, aws_cloudtrail_iam_change_acctmgmt, account,management, , unknown, AWSReservedSSO_AWSAdministratorAccess_d8318c70c7f3047c | role, , aws_cloudtrail_change, , johndoe1@example.com, , johndoe1@example.com |
| aws:cloudtrail | BatchGetImage | change_type, user, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | image, change, aws_cloudtrail_change, change |
| aws:cloudtrail | CreateAuthorizer | change_type, user, object_id, status, action, object, object_attrs | object_category, user_name | | unknown, ACOE-AWS-Developer | authorizer, johndoe1@example.com |
| aws:cloudtrail | CreateClientVpnEndpoint | user, object_id, status, action, object, object_attrs | object_category, change_type, user_name | | unknown, EC2, AWSReservedSSO_AWSAdministratorAccess_e5ad140e18859391 | client-vpn-endpoint, network, johndoe1@example.com |
| aws:cloudtrail | CreateConnection | change_type, user, result, object_id, status, action, object, object_attrs | object_category, user_name | | unknown, AWSReservedSSO_AWSAdministratorAccess_d8318c70c7f3047c | connection, johndoe1@example.com |
| aws:cloudtrail | CreateDBClusterSnapshot | change_type, object_id, status, action, object_path, object, object_attrs | object_category | | unknown | cluster |
| aws:cloudtrail | CreateDataChannel | user, change_type, status, action, object, object_attrs | object_category | | unknown | service |
| aws:cloudtrail | CreateLoadBalancer | object_id, status, action, object_path, object, object_attrs | object_category | | unknown | load balancer |
| aws:cloudtrail | CreateNamespace | change_type, object_id, status, action, object_path, object, object_attrs | object_category, user, user_name | | unknown, , AWSReservedSSO_AWSAdministratorAccess_d8318c70c7f3047c | namespace, johndoe1@example.com, johndoe1@example.com |
| aws:cloudtrail | CreatePolicy | object_path, object, object_attrs, object_id | object_category, tag, eventtype, tag::eventtype, user, action, user_name | | unknown, account,management, aws_cloudtrail_iam_change_acctmgmt, account,management, , unknown, AWSReservedSSO_AWSAdministratorAccess_d8318c70c7f3047c | policy, , , , johndoe1@example.com, , johndoe1@example.com |
| aws:cloudtrail | CreateServiceLinkedRole | object_id, status, action, object_path, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user, user_name | | unknown, account,management, aws_cloudtrail_iam_change_acctmgmt, account,management, , AWSReservedSSO_AWSAdministratorAccess_e5ad140e18859391 | service linked role, , , , johndoe1@example.com, johndoe1@example.com |
| aws:cloudtrail | CreateSnapshot | object_id, status, action, object, object_attrs | object_category, change_type, user | | unknown, EC2, | snapshot, virtual computing, PrismaCloudRole-member |
| aws:cloudtrail | CreateVpc | object_id, status, action, object, object_attrs | object_category, change_type, tag, eventtype, tag::eventtype, user | | unknown, EC2, network, aws_cloudtrail_notable_network_events, network, | vpc, virtual computing, , , , PrismaCloudRole-member |
| aws:cloudtrail | CreateWorkgroup | change_type, object_id, status, action, object, object_attrs | object_category, user, user_name | | unknown, , AWSReservedSSO_AWSAdministratorAccess_d8318c70c7f3047c | workgroup, johndoe1@example.com, johndoe1@example.com |
| aws:cloudtrail | Decrypt | change_type, user, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user_name | | unknown, , , , ACOE-AWS-Developer | ciphertext, change, aws_cloudtrail_change, change, johndoe1@example.com |
| aws:cloudtrail | MonitorInstances, DescribeNatGateways, DescribeSubnets, DeleteNetworkInterface, DeleteAccessKey, DescribeNetworkAcls, DescribeEgressOnlyInternetGateways, DescribeNetworkInterfaces, DescribeSecurityGroups, DescribeVpcEndpointServiceConfigurations, DescribeVpcPeeringConnections, DescribeRouteTables, DescribeVpcEndpoints, DescribeVpcs, DescribeVpnGateways | | change_type | | EC2 | virtual computing |
| aws:cloudtrail | DeleteDBSubnetGroup | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user, user_name | | unknown, network, aws_cloudtrail_notable_network_events, network, , ACOE-AWS-SRE | subnet_group, , , , johndoe1@example.com, johndoe1@example.com |
| aws:cloudtrail | DeleteVpcEndpoints | user, object_id, status, action, object, object_attrs | object_category, change_type, tag, eventtype, tag::eventtype | | unknown, EC2, network, aws_cloudtrail_notable_network_events, network | vpc endpoint, virtual computing, , , |
| aws:cloudtrail | DescribeAccessPoints | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | access point, change, aws_cloudtrail_change, change, AWSServiceRoleForConfig |
| aws:cloudtrail | DescribeAccountSubscription | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, user, tag::eventtype | | unknown, , , , | aws account, change, aws_cloudtrail_change, AWSServiceRoleForConfig, change |
| aws:cloudtrail | DescribeAddresses | object_id, status, action, object, object_attrs | object_category, change_type, tag, eventtype, tag::eventtype, user | | unknown, EC2, , , , | AWS account, virtual computing, change, aws_cloudtrail_change, change, CloudHealthRole,AWSServiceRoleForTrustedAdvisor |
| aws:cloudtrail | DescribeBackupPolicy | user, change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | Elastic filesystem, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeCluster | user, change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | EKS cluster, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeConfigurationSettings | user, change_type, object_id, status, action, object, object_attrs | object_category, eventtype | | unknown, | application, aws_cloudtrail_change |
| aws:cloudtrail | DescribeContinuousBackups | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | table, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeCustomerGateways | status, action | object_category, tag, eventtype, user, tag::eventtype, change_type | | unknown, , , , , EC2 | VPN customer gateways, change, aws_cloudtrail_change, AWSServiceRoleForConfig, change, network |
| aws:cloudtrail | DescribeDBClusterSnapshotAttributes | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | DB cluster snapshot attribute, change, aws_cloudtrail_change, change, sgs-cloud-security-audit,AWSServiceRoleForConfig |
| aws:cloudtrail | DescribeDBClusterSnapshots | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | DB cluster snapshot, change, aws_cloudtrail_change, change, AWSServiceRoleForConfig |
| aws:cloudtrail | DescribeDBClusters | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | DB cluster, change, aws_cloudtrail_change, change, AWSServiceRoleForConfig |
| aws:cloudtrail | DescribeDBEngineVersions | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user, user_name | | unknown, , , , , ACOE-AWS-SRE | DB engine, change, aws_cloudtrail_change, change, johndoe1@example.com, johndoe1@example.com |
| aws:cloudtrail | DescribeDBInstances | change_type, status, object_attrs, action | object_category, tag, eventtype, tag::eventtype, object_id, object | | unknown, , , , , rds.amazonaws.com | DB instance, change, aws_cloudtrail_change, change, TestAccess, DB instance,TestAccess |
| aws:cloudtrail | DescribeDBSecurityGroups | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | DB security group, change, aws_cloudtrail_change, change, CloudHealthRole,AWSServiceRoleForTrustedAdvisor,AWSServiceRoleForConfig |
| aws:cloudtrail | DescribeDBSnapshotAttributes | user, change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | DB snapshot attribute, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeDBSnapshots | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | DB snapshot, change, aws_cloudtrail_change, change, IVP-Backup-EucDev-iamRoleForBackup-yi08ESmGB1MV |
| aws:cloudtrail | DescribeDBSubnetGroups | change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | DB Subnet Group, change, aws_cloudtrail_change, change, CloudHealthRole,AWSServiceRoleForConfig |
| aws:cloudtrail | DescribeDRTAccess | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | Amazon S3 log buckets, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeDeliveryStream | user, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, change_type | | unknown, , , , stream | Delivery stream, change, aws_cloudtrail_change, change, data |
| aws:cloudtrail | DescribeDirectories | change_type, status, action, object, object_attrs | object_category, tag, eventtype, user, tag::eventtype | | unknown, , , , | directory, change, aws_cloudtrail_change, PrismaCloudRole-member, change |
| aws:cloudtrail | DescribeEndpoint | user, change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | endpoint, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeFileSystemPolicy | user, change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | Filesystem Policy, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeFileSystems | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | Elastic filesystem, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeFleets | user, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, change_type | | unknown, , , , EC2 | fleet, change, aws_cloudtrail_change, change, virtual computing |
| aws:cloudtrail | DescribeHosts | user, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, change_type | | unknown, , , , EC2 | Dedicated Host, change, aws_cloudtrail_change, change, virtual computing |
| aws:cloudtrail | DescribeHub | change_type, status, action, object, object_attrs | object_category, tag, eventtype, user, tag::eventtype | | unknown, , , , | Security Hub, change, aws_cloudtrail_change, PrismaCloudRole-member, change |
| aws:cloudtrail | DescribeImages | status, object, object_attrs, action | object_category, tag, eventtype, user, tag::eventtype, change_type | | unknown, , , , , EC2 | Image, change, aws_cloudtrail_change, CloudHealthRole, change, virtual computing |
| aws:cloudtrail | DescribeInstances | status, object_attrs, action | object_category, tag, eventtype, tag::eventtype, change_type, object | object_id | unknown, , , , EC2, ec2.amazonaws.com | Instance, change, aws_cloudtrail_change, change, virtual computing, Instance |
| aws:cloudtrail | DescribeInternetGateways | user, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, change_type | | unknown, , , , EC2 | internet gateway, change, aws_cloudtrail_change, change, virtual computing |
| aws:cloudtrail | DescribeJobs | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | DRS batch jobs, change, aws_cloudtrail_change, change |
| aws:cloudtrail | DescribeKeyPairs | object_id, status, action, object, object_attrs | object_category, change_type, tag, eventtype, tag::eventtype, user | | unknown, EC2, , , , | key pairs, AAA, change, aws_cloudtrail_change, change, PrismaCloudRole-member |
| aws:cloudtrail | DescribeListeners | status, object, object_attrs, action | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | listeners, change, aws_cloudtrail_change, change, PrismaCloudRole-member |
| aws:cloudtrail | DescribeLoadBalancers | object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user | | unknown, , , , | load balancers, change, aws_cloudtrail_change, change, PrismaCloudRole-member |
| aws:cloudtrail | DescribeSecret | change_type, user, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | secret, change, aws_cloudtrail_change, change |
| aws:cloudtrail | GetDomainPermissionsPolicy | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | policy, change, aws_cloudtrail_change, change |
| aws:cloudtrail | GetSecretValue | user, change_type, object_id, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype, user_name | | unknown, , , , AWSReservedSSO_AWSAdministratorAccess_d8318c70c7f3047c | secret, change, aws_cloudtrail_change, change, johndoe1@example.com |
| aws:cloudtrail | GetSecurityConfigurations | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | security configurations, change, aws_cloudtrail_change, change |
| aws:cloudtrail | ListOrganizationAdminAccounts | user, change_type, status, action, object, object_attrs | object_category, tag, eventtype, tag::eventtype | | unknown, , , | admin accounts, change, aws_cloudtrail_change, change |

Fixed issues

Version 7.9.0 of the Splunk Add-on for Amazon Web Services fixes the following issues, if any:

Known issues

Version 7.9.0 of the Splunk Add-on for Amazon Web Services has the following known issues, if any:

Third-party software attributions

Version 7.9.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.8.0

Compatibility

Version 7.8.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Category | Details |
|---|---|
| Splunk platform versions | 9.1.x, 9.2.x, 9.3.x |
| CIM | 5.1.1 and later |
| Supported OS for data collection | Platform independent |
| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API, S3 Event Notifications using EventBridge), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.8.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added support for collecting data from multiple CSV files inside a ZIP archive in the Generic S3 input.

- Added support for creating, listing, and deleting all input types in the CLI tool.

- Added support for parsing Amazon S3 Event Notifications delivered through Amazon EventBridge in the SQS-based S3 input.

- Added support for collecting data from single-space-delimited files in the SQS-based S3 and Generic S3 inputs.

- Enhanced the data collection logic in the Inspector (v2) input so that findings in the “Resolved” state are also collected.

- For the SQS-based S3 input, enhanced the SNS signature validation and deprecated the SNS message max age parameter.
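To make the ZIP and delimiter items above concrete, the following is a minimal sketch of reading every CSV member of a ZIP archive with the Python standard library. It is not the add-on's implementation: the helper name `iter_zip_csv_rows` is hypothetical, and the assumptions that members end in `.csv`, are UTF-8 encoded, and have a header row are for illustration only. The `delimiter` argument shows how the same reader could handle single-space-delimited content.

```python
import csv
import io
import zipfile
from typing import Dict, Iterator, Tuple


def iter_zip_csv_rows(
    zip_bytes: bytes, delimiter: str = ","
) -> Iterator[Tuple[str, Dict[str, str]]]:
    """Yield (member_name, row) for every CSV member of an in-memory ZIP archive.

    Hypothetical helper for illustration; not the add-on's implementation.
    Pass delimiter=" " for single-space-delimited files.
    """
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            # Skip directory entries and members that are not CSV files.
            if name.endswith("/") or not name.lower().endswith(".csv"):
                continue
            with archive.open(name) as raw:
                # Wrap the binary member stream so the csv module can read text.
                text = io.TextIOWrapper(raw, encoding="utf-8", newline="")
                for row in csv.DictReader(text, delimiter=delimiter):
                    yield name, row
```

Members are read in archive order, so rows from one CSV file are emitted before rows from the next.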

Fixed issues

Version 7.8.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.8.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.8.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.7.1

Compatibility

Version 7.7.1 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 9.1.x, 9.2.x, 9.3.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.7.1 of the Splunk Add-on for AWS contains the following new and changed features:

- Fixed the OS compatibility issue for the Security Lake input.

Fixed issues

Version 7.7.1 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.7.1 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.7.1 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.7.0

Compatibility

Version 7.7.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 9.1.x, 9.2.x, 9.3.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.7.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added support for metric indexes in the VPC Flow Logs input.

- The `bytes` and `packets` fields are treated as metric measures, and the rest of the fields are treated as dimensions.

- Events whose `log_status` field is `NODATA` or `SKIPDATA` are not ingested into the metric index, because they don’t contain any values for metric measures.

- Enhanced the time window calculation mechanism for data collection in the CloudWatch Logs input, and added the Query Window Size parameter to the UI. For more information, see CloudWatch Log inputs.

- Fixed a missing-data issue in the Generic S3 input.

- Provided compatibility for IPv6.

- Security fixes.
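The measure/dimension split described above for the VPC Flow Logs metric index can be sketched as follows. This is an illustration under stated assumptions, not the add-on's code: it assumes each flow log record has already been extracted into a dict keyed by the add-on's field names (`bytes`, `packets`, `log_status`, and so on), and the function name `to_metric_point` is hypothetical.

```python
from typing import Dict, Optional

# Assumption: flow log fields are already extracted into a dict using the
# add-on's field names (bytes, packets, log_status, ...).
MEASURE_FIELDS = {"bytes", "packets"}
SKIPPED_STATUSES = {"NODATA", "SKIPDATA"}


def to_metric_point(event: Dict[str, str]) -> Optional[Dict[str, Dict]]:
    """Split one VPC flow log event into metric measures and dimensions.

    Returns None for NODATA/SKIPDATA records, which carry no measure values.
    """
    if event.get("log_status") in SKIPPED_STATUSES:
        return None
    measures: Dict[str, float] = {}
    dimensions: Dict[str, str] = {}
    for key, value in event.items():
        if key in MEASURE_FIELDS:
            measures[key] = float(value)  # numeric metric measure
        else:
            dimensions[key] = value  # everything else becomes a dimension
    return {"measures": measures, "dimensions": dimensions}
```

Dropping `NODATA` and `SKIPDATA` records before the split mirrors the release note: those records have no byte or packet counts to store as measures.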

Fixed issues

Version 7.7.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.7.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.7.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.6.0

Note

Billing (Legacy) input is deprecated in add-on version 7.6.0. Configure Billing (Cost and Usage Report) inputs to collect billing data.

Compatibility

Version 7.6.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x, 9.1.x, 9.2.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Transit Gateway Flow Logs, Billing Cost and Usage Report, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.6.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Security Lake input now supports OCSF schema v1.1 for AWS log sources Version 2. For more information, see the OCSF source identification section of the Open Cybersecurity Schema Framework (OCSF) topic in the AWS documentation.

- Deprecated Billing (Legacy) input. Configure Billing (Cost and Usage Report) input to collect billing data. For more information, see Cost and Usage Report inputs.

- Added support for Transit Gateway Flow Logs:

- Logs in the default format, stored as text files, are supported.

- The SQS-based S3 input type is supported for the pull-based mechanism.

- Transit Gateway Flow Logs can also be collected through the push-based mechanism.

- Enhanced the execution of the CloudWatch input to improve performance, and added the CloudWatch Max Threads parameter to the Add-on Global Settings page. For more information, see Add-on Global Settings.

- Updated the CIM fields `protocol`, `transport`, and `protocol_full_name` according to CIM best practices. This change affects the following source types: `aws:cloudwatchlogs:vpcflow`, `aws:cloudtrail`, and `aws:transitgateway:flowlogs`.

- Security fixes.

- Minor bug fixes.

Fixed issues

Version 7.6.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.6.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.6.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.5.1

Compatibility

Version 7.5.1 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x, 9.1.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.5.1 of the Splunk Add-on for AWS contains the following new and changed features:

- Provided support for Python 3.9.

- Minor UI improvements for a more streamlined upgrade experience.

Fixed issues

Version 7.5.1 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.5.1 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.5.1 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.5.0

Version 7.5.0 of the Splunk Add-on for Amazon Web Services was released on April 2, 2024.

Compatibility

Version 7.5.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x, 9.1.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.5.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added support for the CloudTrail Lake input.

- Added support for assume role in the CloudWatch Logs input.

- Enhanced the CIM support for the `aws:cloudwatch:guardduty` and `aws:cloudwatchlogs:guardduty` source types:

- The CIM field `mitre_technique_id` was removed in this release because the existing values were found to be inaccurate and misleading, and the vendor does not provide MITRE technique IDs in GuardDuty events.

- The `src_type` and `dest_type` fields were corrected or added for all GuardDuty events.

- The `transport` field was corrected for a few events.

- The `signature` and `signature_id` fields were corrected for GuardDuty events.

- The `src` field was added or corrected for certain events.

- See the following table to review the data model information based on the `service.actionType` field in your GuardDuty events:

| Alerts data model | Intrusion detection data model |
|---|---|
| `AWS_API_CALL`, `KUBERNETES_API_CALL`, `RDS_LOGIN_ATTEMPT`, or `service.actionType` is `null` | `NETWORK_CONNECTION`, `DNS_REQUEST`, `PORT_PROBE` |

- Enhanced data collection for the Metadata input. Previously, if an input was configured with multiple regions, it collected global services data from every configured region, which duplicated data within a single input execution. In this version, global services data is collected from only one region when multiple regions are configured in the same input.

- Minor bug fixes.
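The GuardDuty data model table in this section can be read as a simple lookup on `service.actionType`. The following sketch encodes that table so you can check which data model an event should land in; the function name `guardduty_data_model` is hypothetical and is not part of the add-on, and returning `None` for unlisted action types is an assumption.

```python
from typing import Optional

# Mapping taken from the GuardDuty data model table in this section; the
# function itself is a hypothetical helper, not part of the add-on.
ALERTS_ACTION_TYPES = {"AWS_API_CALL", "KUBERNETES_API_CALL", "RDS_LOGIN_ATTEMPT"}
INTRUSION_DETECTION_ACTION_TYPES = {"NETWORK_CONNECTION", "DNS_REQUEST", "PORT_PROBE"}


def guardduty_data_model(action_type: Optional[str]) -> Optional[str]:
    """Return the CIM data model for a GuardDuty service.actionType value."""
    # A null actionType is grouped with the Alerts data model.
    if action_type is None or action_type in ALERTS_ACTION_TYPES:
        return "Alerts"
    if action_type in INTRUSION_DETECTION_ACTION_TYPES:
        return "Intrusion Detection"
    return None  # action types not listed in the table
```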

Fixed issues

Version 7.5.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.5.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.5.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.4.1

Version 7.4.1 of the Splunk Add-on for Amazon Web Services was released on February 21, 2024.

Note

Starting in version 7.1.0 of the Splunk Add-on for AWS, the file-based checkpoint mechanism was migrated to the Splunk KV store for the Billing Cost and Usage Report, CloudWatch Metrics, and Incremental S3 inputs. Turn off these inputs whenever you restart the Splunk software. Otherwise, data is duplicated for your already configured inputs.

Note

Version 7.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Security Lake. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Security Lake and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Security Lake before upgrading the Splunk Add-on for AWS to version 7.0.0 or later to avoid data duplication and discrepancies.

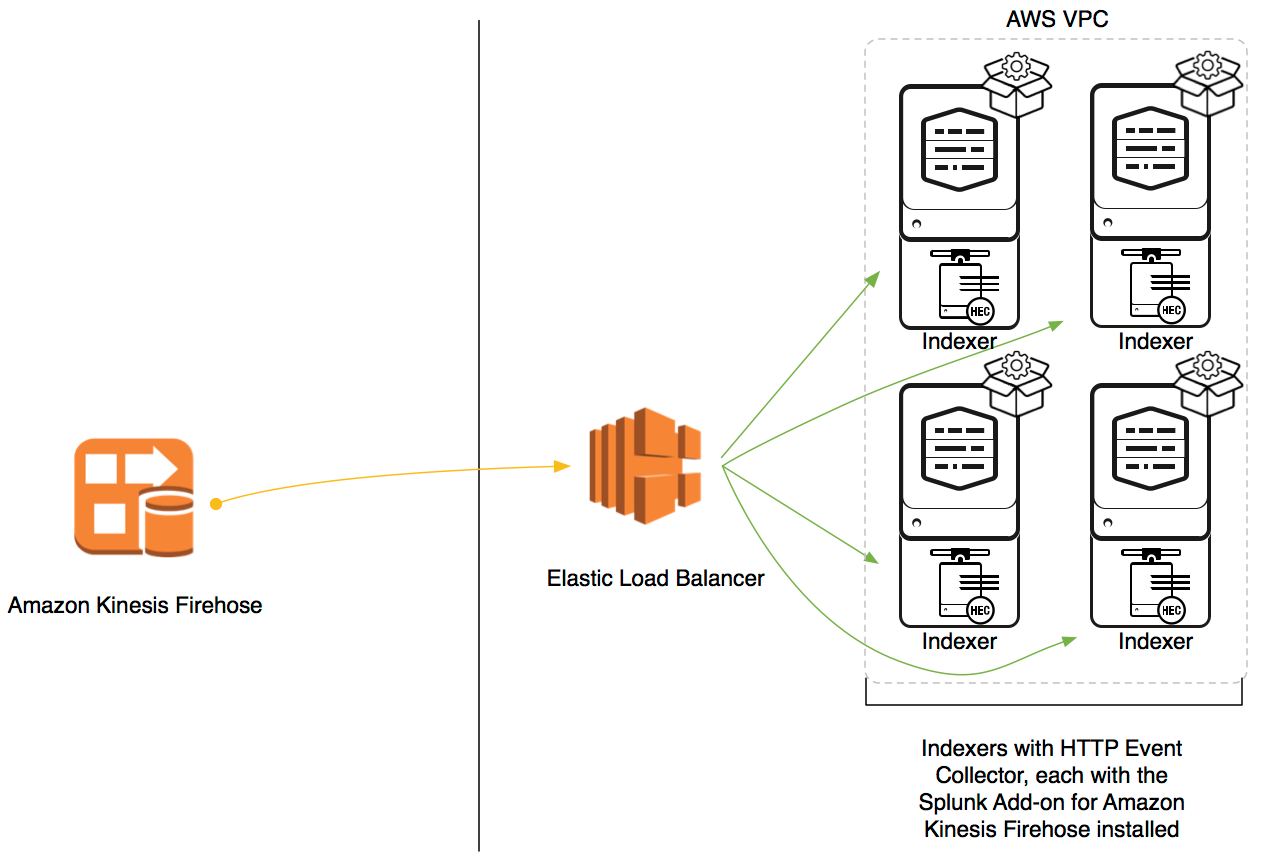

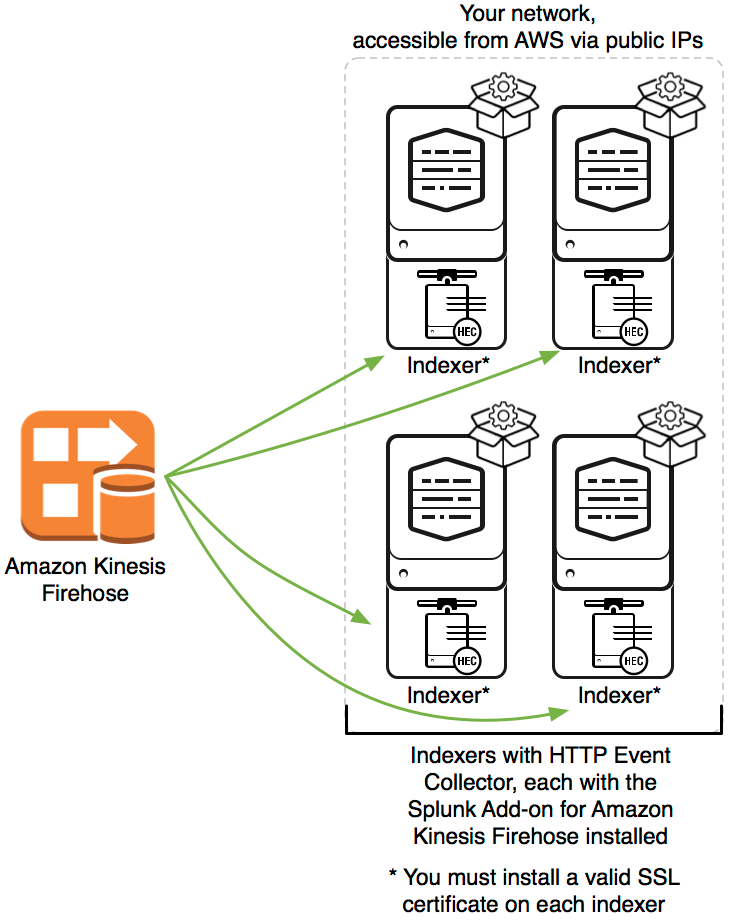

Note

Version 6.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility

Version 7.4.1 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x, 9.1.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.4.1 of the Splunk Add-on for AWS contains the following new and changed features:

- Fixed an API loading issue for the Metadata input.

Fixed issues

Version 7.4.1 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.4.1 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.4.1 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.4.0

Version 7.4.0 of the Splunk Add-on for Amazon Web Services was released on December 21, 2023.

Note

Starting in version 7.1.0 of the Splunk Add-on for AWS, the file-based checkpoint mechanism was migrated to the Splunk KV store for the Billing Cost and Usage Report, CloudWatch Metrics, and Incremental S3 inputs. Turn off these inputs whenever you restart the Splunk software. Otherwise, data is duplicated for your already configured inputs.

Note

Version 7.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Security Lake. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Security Lake and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Security Lake before upgrading the Splunk Add-on for AWS to version 7.0.0 or later to avoid data duplication and discrepancies.

Note

Version 6.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility

Version 7.4.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.4.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added decoding support for parsing Kinesis Firehose error data. You no longer need a Lambda function to decode this data, so you also no longer need Lambda to re-ingest failed events from S3.

- Enhanced the UI experience.

- Minor bug fixes.
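As background for the Kinesis Firehose decoding change above, the sketch below shows the kind of decoding that previously required a Lambda function. It assumes that Firehose error objects in S3 contain one JSON record per line, each with a base64-encoded `rawData` field holding the original event; verify this against your own error objects. The function name `decode_firehose_error_records` is hypothetical and does not describe how the add-on implements decoding internally.

```python
import base64
import json
from typing import List


def decode_firehose_error_records(payload: str) -> List[str]:
    """Decode the original events from a Firehose error object.

    Assumes one JSON record per line, each carrying a base64-encoded
    "rawData" field; records without that field are skipped.
    """
    events: List[str] = []
    for line in payload.splitlines():
        line = line.strip()
        if not line:
            continue  # ignore blank lines between records
        record = json.loads(line)
        raw = record.get("rawData")
        if raw is None:
            continue
        events.append(base64.b64decode(raw).decode("utf-8"))
    return events
```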

Fixed issues

Version 7.4.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.4.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.4.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.3.0

Version 7.3.0 of the Splunk Add-on for Amazon Web Services was released on November 10, 2023.

Note

Starting in version 7.1.0 of the Splunk Add-on for AWS, the file-based checkpoint mechanism was migrated to the Splunk KV store for the Billing Cost and Usage Report, CloudWatch Metrics, and Incremental S3 inputs. Turn off these inputs whenever you restart the Splunk software. Otherwise, data is duplicated for your already configured inputs. Turning off inputs is not supported for Kinesis inputs.

In version 7.3.0, the checkpoint migration also applies to the following inputs:

- Inspector

- InspectorV2

- Config Rules

- CloudWatch Logs

- Kinesis

Note

Version 7.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Security Lake. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Security Lake and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Security Lake before upgrading the Splunk Add-on for AWS to version 7.0.0 or later to avoid data duplication and discrepancies.

Note

Version 6.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility

Version 7.3.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.3.0 of the Splunk Add-on for AWS contains the following new and changed features:

- The file checkpoint mechanism was migrated to the Splunk KV store for the Inspector, InspectorV2, Config Rules, CloudWatch Logs, and Kinesis inputs.

- Updated existing Health Check Dashboards to enhance troubleshooting and performance monitoring. See AWS Health Check Dashboards for more information.

Fixed issues

Version 7.3.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.3.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.3.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.2.0

Version 7.2.0 of the Splunk Add-on for Amazon Web Services was released on October 17, 2023.

Compatibility

Version 7.2.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.2.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Removed the AWS Lambda function dependency for the `aws:cloudwatchlogs:vpcflow` source type.

- Added support for fetching logs from AWS directory structures using the CloudTrail Incremental S3 input.

- Enhanced throttle support for the Metadata input to mitigate throttling and limit errors.

- Added the SNS message Max Age parameter to the SQS-based S3 input. Use it to make data collection more efficient by collecting only messages within a specified age limit.
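To illustrate the kind of age check that a max age parameter implies, the sketch below compares an SNS notification's `Timestamp` (an ISO 8601 UTC string in the SNS message JSON) with a maximum age. The function name `is_within_max_age` and the exact comparison are assumptions for illustration, not the add-on's code. This parameter was later deprecated in version 7.8.0.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_within_max_age(
    sns_message: dict, max_age: timedelta, now: Optional[datetime] = None
) -> bool:
    """Return True if the SNS message is no older than max_age."""
    now = now or datetime.now(timezone.utc)
    # SNS timestamps end in "Z"; fromisoformat in Python versions before 3.11
    # does not accept "Z", so normalize it to an explicit UTC offset.
    sent = datetime.fromisoformat(sns_message["Timestamp"].replace("Z", "+00:00"))
    return now - sent <= max_age
```

Passing `now` explicitly keeps the check deterministic, which is useful when testing a filter like this.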

Fixed issues

Version 7.2.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.2.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.2.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.1.0

Version 7.1.0 of the Splunk Add-on for Amazon Web Services was released on July 25, 2023.

Note

Starting in version 7.1.0 of the Splunk Add-on for AWS, the file-based checkpoint mechanism was migrated to the Splunk KV store for the Billing Cost and Usage Report, CloudWatch Metrics, and Incremental S3 inputs. Turn off these inputs whenever you restart the Splunk software. Otherwise, data is duplicated for your already configured inputs.

Note

Version 7.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Security Lake. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Security Lake and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Security Lake before upgrading the Splunk Add-on for AWS to version 7.0.0 or later to avoid data duplication and discrepancies.

Note

Version 6.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility

Version 7.1.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.1.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added support for the following services for the AWS metadata input:

- EKS

- ElastiCache

- EMR

- GuardDuty

- Network Firewall

- Route 53

- WAF

- WAF v2

- Enhanced the AWS metadata input for the following services:

- CloudFront

- EC2

- ELB

- IAM

- Kinesis Data Firehose

- VPC

- The file checkpoint mechanism was migrated to the Splunk KV store for Billing Cost and Usage Report, CloudWatch Metrics, and Incremental S3 inputs.

Fixed issues

Version 7.1.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.1.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.1.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 7.0.0

Version 7.0.0 of the Splunk Add-on for Amazon Web Services was released on May 18, 2023.

Note

Version 7.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Security Lake. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Security Lake and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Security Lake before upgrading the Splunk Add-on for AWS to version 7.0.0 or later to avoid data duplication and discrepancies.

Note

Version 6.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility

Version 7.0.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.2.x, 9.0.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, EventBridge (CloudWatch API), Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, AWS Security Hub findings, and Amazon Security Lake events |

New features

Version 7.0.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Added data input support for the Amazon Security Lake service. You can now ingest security events from Amazon Security Lake, normalized to the Open Cybersecurity Schema Framework (OCSF) schema. The Amazon Security Lake service makes AWS security events available as multi-event Apache Parquet objects in an S3 bucket. Each object has a corresponding SQS notification when it is ready for download. OCSF is an open-source project that delivers an extensible framework for developing schemas, along with a vendor-agnostic core security schema.

Fixed issues

Version 7.0.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues

Version 7.0.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions

Version 7.0.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 6.4.0

Version 6.4.0 of the Splunk Add-on for Amazon Web Services was released on April 19, 2023.

Note

Version 6.0.0 of the Splunk Add-on for AWS incorporates all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into your Splunk platform deployment.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility¶

Version 6.4.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.1.x, 8.2.x, 9.0.x |

| CIM | 5.1.1 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Security Hub findings events |

New features¶

Version 6.4.0 of the Splunk Add-on for AWS contains the following new and changed features:

- Enhanced CIM support of the aws:securityhub:findings source type to support the new event format.

- Fixed CIM extractions for the app and user fields, and added extractions for the user_name field in the aws:securityhub:findings source type.

Fixed issues¶

Version 6.4.0 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues¶

Version 6.4.0 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions¶

Version 6.4.0 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 6.3.2¶

Version 6.3.2 of the Splunk Add-on for Amazon Web Services was released on February 23, 2023.

Note

Version 6.0.0 of the Splunk Add-on for AWS includes a merge of all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into Splunk.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility¶

Version 6.3.2 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.1.x, 8.2.x, 9.0.x |

| CIM | 4.20 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Security Hub findings events |

New features¶

Version 6.3.2 of the Splunk Add-on for AWS contains the following new and changed features:

- Security-related bug fixes. No new features were added.

Fixed issues¶

Version 6.3.2 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues¶

Version 6.3.2 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions¶

Version 6.3.2 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 6.3.1¶

Version 6.3.1 of the Splunk Add-on for Amazon Web Services was released on January 23, 2023.

Note

Version 6.0.0 of the Splunk Add-on for AWS includes a merge of all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into Splunk.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility¶

Version 6.3.1 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.1.x, 8.2.x, 9.0.x |

| CIM | 4.20 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Security Hub findings events |

Note

The field alias functionality is compatible with the current version of this add-on. The current version of this add-on does not support older field alias configurations.

New features¶

Version 6.3.1 of the Splunk Add-on for AWS contains the following new and changed features:

- Restored support for the AWS VPC Flow Logs default log format (v1-v2 fields only).

- Fixed an upgrade issue with Generic S3 inputs.
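For reference, the AWS default VPC Flow Logs format is a fixed sequence of 14 space-separated fields, all of them version 2 fields. The following Python sketch is not the add-on's own parsing code; it only illustrates how a default-format record maps to field names. The helper `parse_default_record` and the sample record are hypothetical, and the field list is taken from the AWS flow log record reference rather than from this add-on.

```python
# Field names of the AWS VPC Flow Logs default format, in order.
# All of these are version 2 fields (per the AWS flow log record reference).
DEFAULT_FIELDS = (
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
)


def parse_default_record(line: str) -> dict:
    """Map one space-separated default-format record to its field names.

    Hypothetical helper for illustration; raises ValueError when the record
    does not have exactly one value per default-format field.
    """
    values = line.split()
    if len(values) != len(DEFAULT_FIELDS):
        raise ValueError(
            f"expected {len(DEFAULT_FIELDS)} fields, got {len(values)}"
        )
    return dict(zip(DEFAULT_FIELDS, values))


if __name__ == "__main__":
    # Illustrative record in the default format.
    sample = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 "
              "172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 "
              "ACCEPT OK")
    record = parse_default_record(sample)
    print(record["srcaddr"], record["dstport"], record["action"])
```

A record with extra v3-v5 values would fail the length check here, which is why a custom log format needs its own field list.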

Fixed issues¶

Version 6.3.1 of the Splunk Add-on for Amazon Web Services fixes the following, if any, issues:

Known issues¶

Version 6.3.1 of the Splunk Add-on for Amazon Web Services has the following, if any, known issues:

Third-party software attributions¶

Version 6.3.1 of the Splunk Add-on for Amazon Web Services incorporates the following third-party libraries:

Third-party software attributions for the Splunk Add-on for Amazon Web Services

Version 6.3.0¶

Version 6.3.0 of the Splunk Add-on for Amazon Web Services was released on December 12, 2022.

Note

Version 6.0.0 of the Splunk Add-on for AWS includes a merge of all the capabilities of the Splunk Add-on for Amazon Kinesis Firehose. Configure the Splunk Add-on for AWS to ingest data from all AWS data sources into Splunk.

If you use both the Splunk Add-on for Amazon Kinesis Firehose and the Splunk Add-on for AWS on the same Splunk instance, you must uninstall the Splunk Add-on for Amazon Kinesis Firehose after upgrading the Splunk Add-on for AWS to version 6.0.0 or later to avoid data duplication and discrepancies.

Data that you previously onboarded through the Splunk Add-on for Amazon Kinesis Firehose is searchable, and your existing searches are compatible with version 6.0.0 of the Splunk Add-on for AWS.

If you are not currently using the Splunk Add-on for Amazon Kinesis Firehose, but plan to use it in the future, download and configure version 6.0.0 or later of the Splunk Add-on for AWS instead of the Splunk Add-on for Amazon Kinesis Firehose.

Compatibility¶

Version 6.3.0 of the Splunk Add-on for Amazon Web Services is compatible with the following software, CIM versions, and platforms:

| Splunk platform versions | 8.1.x, 8.2.x, 9.0.x |

| CIM | 4.20 and later |

| Supported OS for data collection | Platform independent |

| Vendor products | Amazon Web Services CloudTrail, CloudWatch, CloudWatch Logs, Config, Config Rules, Inspector Classic, Inspector, Kinesis, S3, VPC Flow Logs, Billing services, Metadata, SQS, SNS, AWS Identity and Access Management (IAM) Access Analyzer, and AWS Security Hub findings events |

Note

The field alias functionality is compatible with the current version of this add-on. The current version of this add-on does not support older field alias configurations.

New features¶

Version 6.3.0 of the Splunk Add-on for AWS contains the following new and changed features:

Note

Starting in version 6.3.0 of the Splunk Add-on for AWS, the VPC Flow Logs extraction format includes v3-v5 fields. Before you upgrade to version 6.3.0 or later of the Splunk Add-on for AWS, Splunk platform deployments that ingest AWS VPC Flow Logs must update the log format in AWS VPC to include the v3-v5 fields so that field extractions succeed. For more information on updating the log format in AWS VPC, see the Configure VPC Flow Logs inputs for the Splunk Add-on for AWS topic in this manual.
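Before upgrading, you can check whether a custom VPC Flow Logs format string already contains the v3-v5 fields that this note refers to. The following Python sketch assumes the AWS `${field-name}` custom format syntax and the AWS field-to-version mapping; the helper name `missing_v3_v5_fields` is hypothetical, and the sketch checks only that fields are present, not the field order that the add-on expects. The Configure VPC Flow Logs inputs topic remains the authoritative description of the required format.

```python
import re

# Fields that AWS introduced in VPC Flow Logs versions 3, 4, and 5
# (taken from the AWS flow log record reference, not from this add-on).
V3_V5_FIELDS = {
    3: ["vpc-id", "subnet-id", "instance-id", "tcp-flags", "type",
        "pkt-srcaddr", "pkt-dstaddr"],
    4: ["region", "az-id", "sublocation-type", "sublocation-id"],
    5: ["pkt-src-aws-service", "pkt-dst-aws-service", "flow-direction",
        "traffic-path"],
}

# A custom log format is a space-separated list of ${field-name} tokens.
FIELD_TOKEN = re.compile(r"\$\{([a-z0-9-]+)\}")


def missing_v3_v5_fields(log_format: str) -> dict:
    """Return {version: [absent fields]} for every v3-v5 field not in log_format.

    Hypothetical helper: it checks presence only, not field order.
    """
    present = set(FIELD_TOKEN.findall(log_format))
    missing = {}
    for version, fields in V3_V5_FIELDS.items():
        absent = [name for name in fields if name not in present]
        if absent:
            missing[version] = absent
    return missing


if __name__ == "__main__":
    # The AWS default format contains only version 2 fields.
    default_format = ("${version} ${account-id} ${interface-id} ${srcaddr} "
                      "${dstaddr} ${srcport} ${dstport} ${protocol} ${packets} "
                      "${bytes} ${start} ${end} ${action} ${log-status}")
    for version, fields in missing_v3_v5_fields(default_format).items():
        print(f"v{version} fields missing: {', '.join(fields)}")
```

An empty result means every v3-v5 field appears in the format string; a non-empty result lists, per version, the fields to add in AWS VPC before upgrading.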

- Expanded support for VPC Flow Logs (sourcetype aws:cloudwatchlogs:vpcflow):

  - Ingestion of VPC Flow Logs through SQS-based S3.

  - Support for parsing the v3-v5 fields that AWS defines for VPC Flow Logs, for both the Splunk-defined custom log format and the select-all log format.

  - Validation of the native delivery of VPC Flow Logs through Kinesis Firehose.

- The addition of an iam_list_policy API to the Metadata input to fetch the following data:

  - All policies related to IAM, using iam:ListPolicy.

  - Permissions data, using iam:GetPolicyVersion.

  - To link users with policies, the iam:ListUserPolicies and iam:ListAttachedUserPolicies policies were added to the Iam_users data.

- Support for the ingestion of OversizedChangeNotification events through the Config input.

- Expanded support for Network Load Balancer (NLB) access logs. The new field elb_type distinguishes between ELB, ALB, and NLB access logs.

- UI input page support to turn CSV parsing on or off and to define a custom delimiter for Generic S3 and SQS-based S3 inputs.